Vipul Nair

Hello

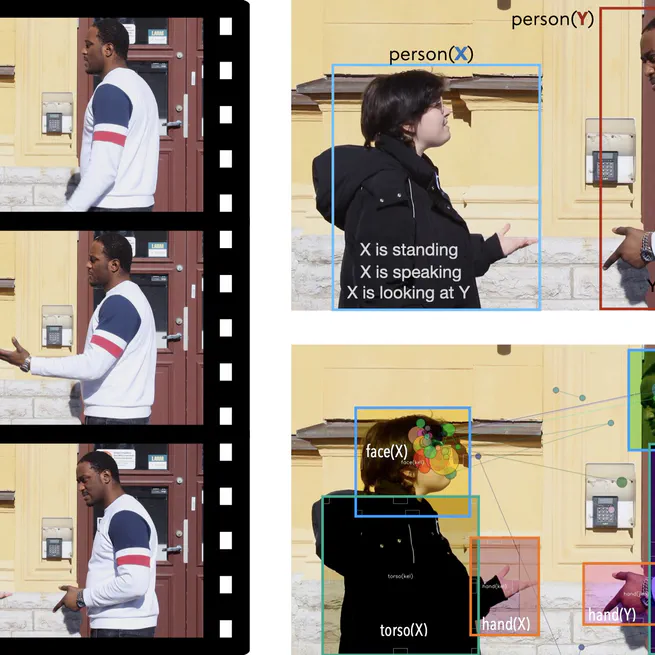

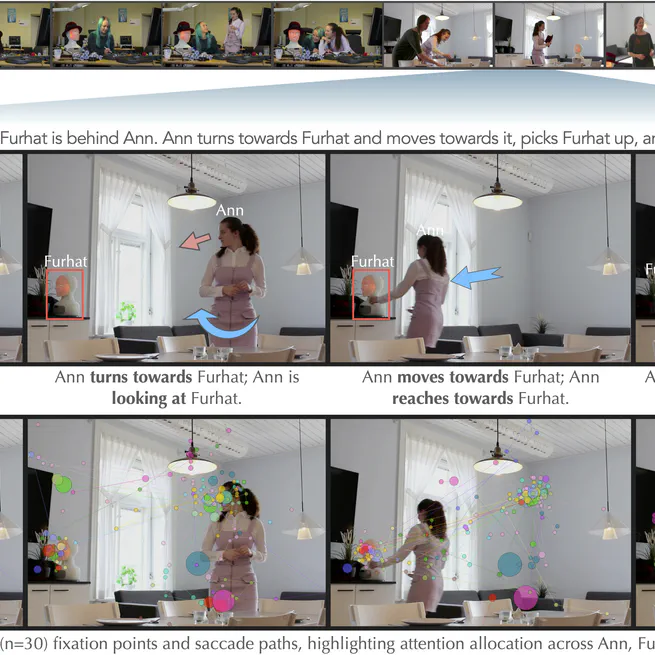

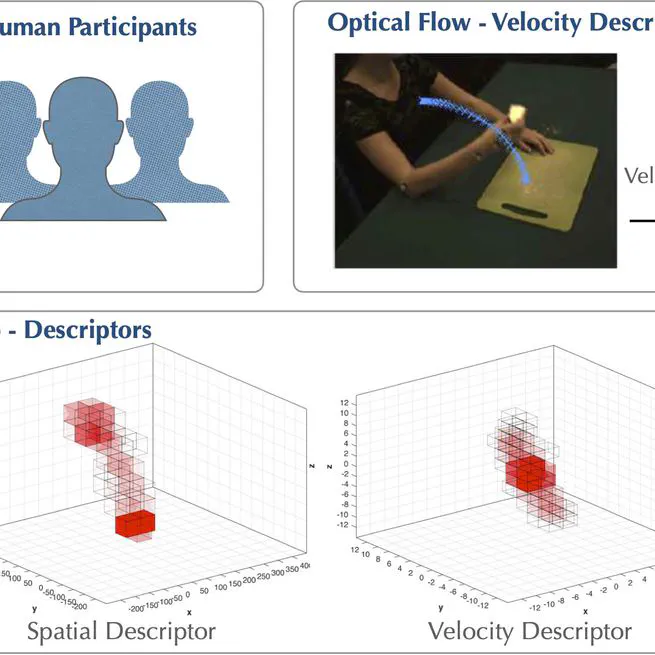

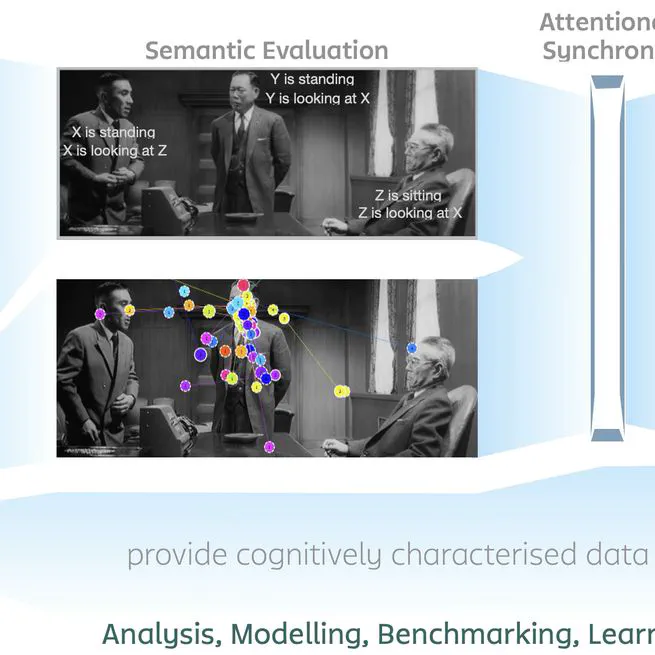

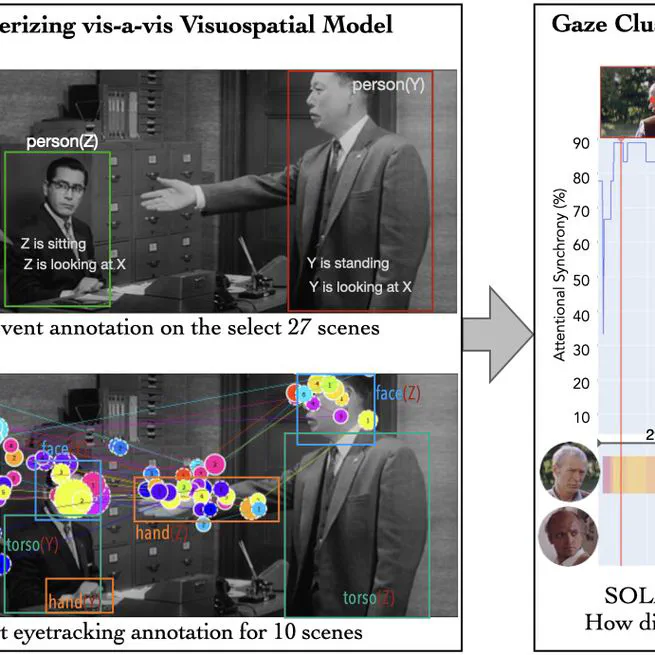

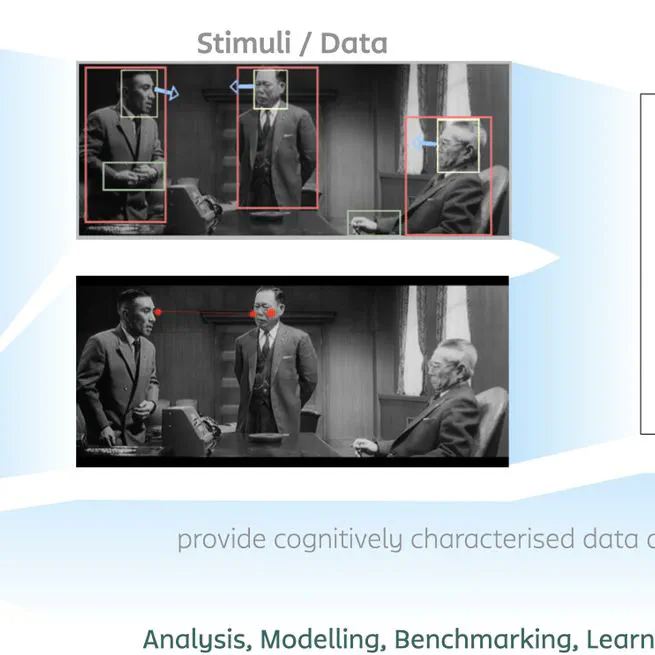

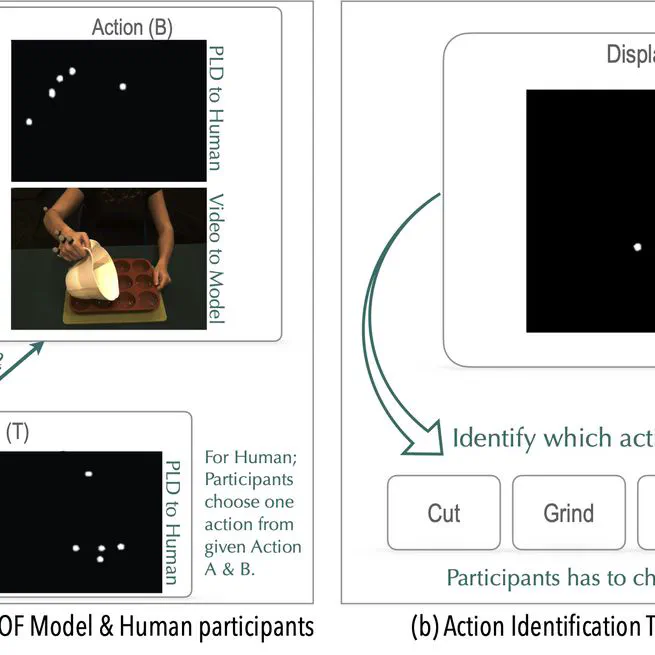

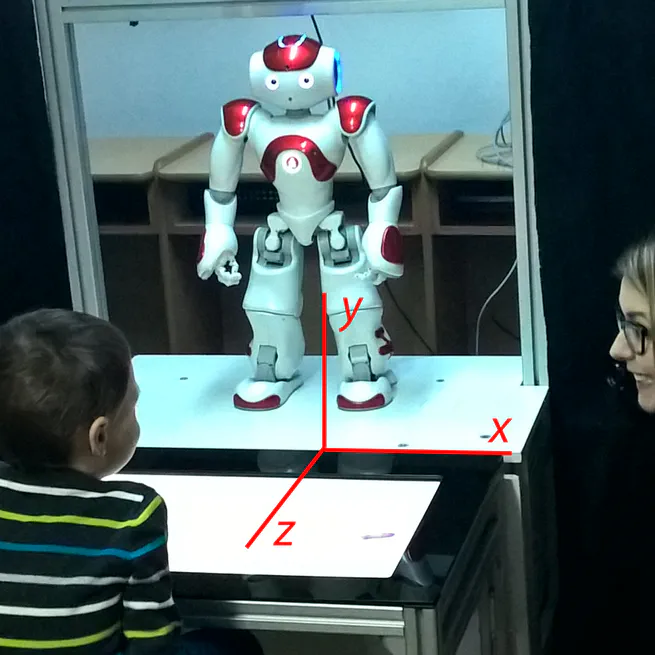

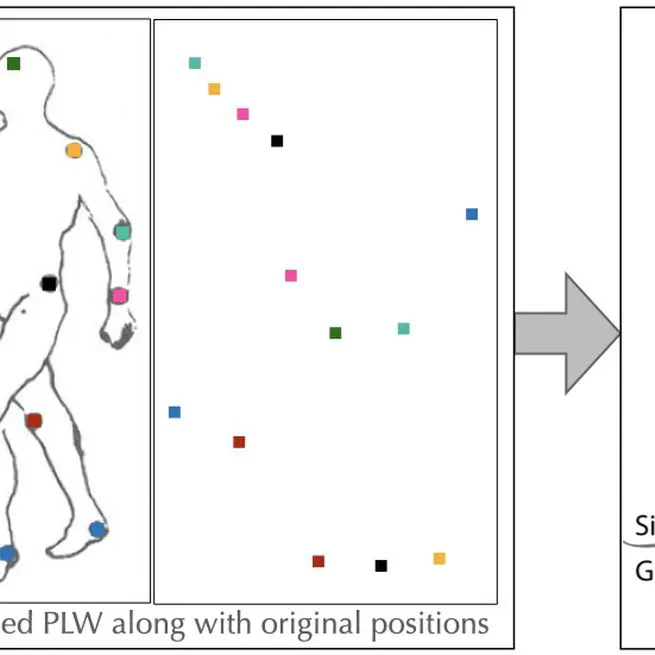

I’m an Informatics researcher who bridges cognitive science, AI, and human-centered technology to study how people process sensory information in real-world interactions. Drawing on 8+ years of experience designing eye-tracking, motion-capture, and mixed-methods studies, I advance behavioral research through publications, datasets, and global collaborations, turning complex insights into intuitive solutions.