A multimodal analysis of visuo-auditory and spatial cues and their role in guiding human attention during real-world social interactions.

April 1, 2024

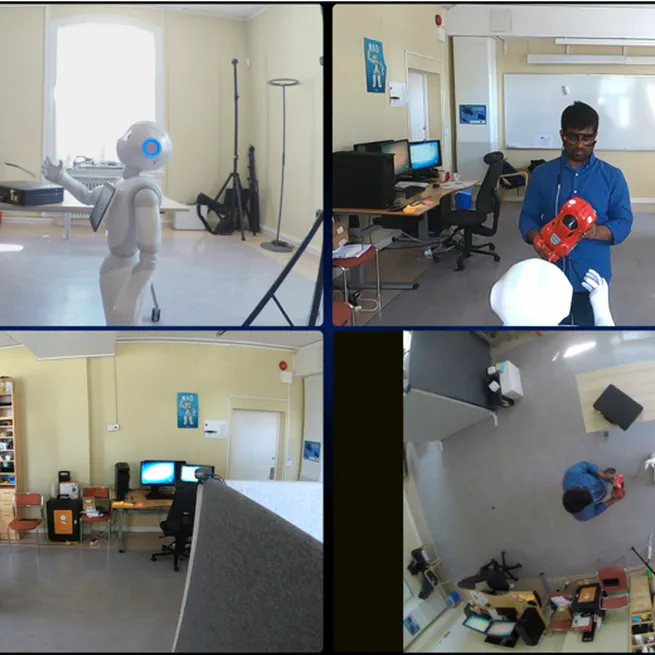

Pilot study on how visuo-auditory robot behaviors influence human attention and intention understanding during collaborative tasks.

January 31, 2023

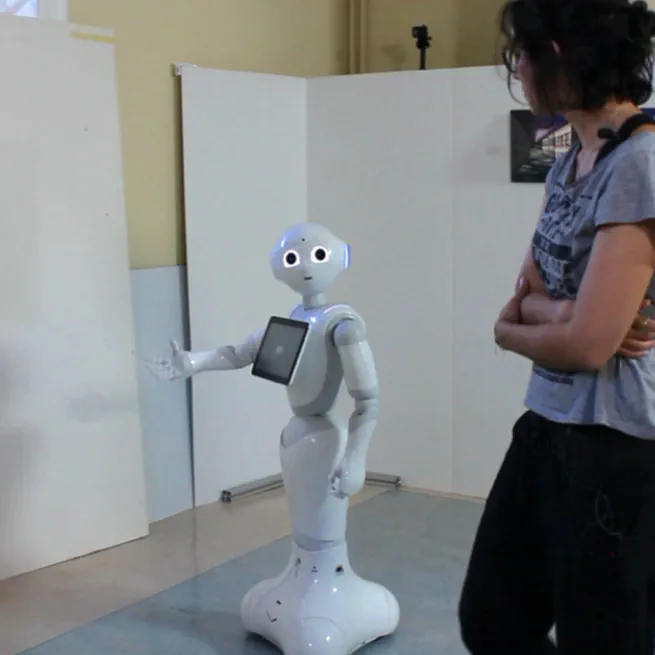

Designing multimodal interaction scenarios to investigate joint attention mechanisms in human-robot museum guidance contexts.

July 1, 2022

A collaborative, open-science audiovisual production for cognitive and behavioral research.

May 31, 2022

Analyzing how visuospatial features guide collective viewer attention using eye-tracking and semantic modeling.

December 31, 2021

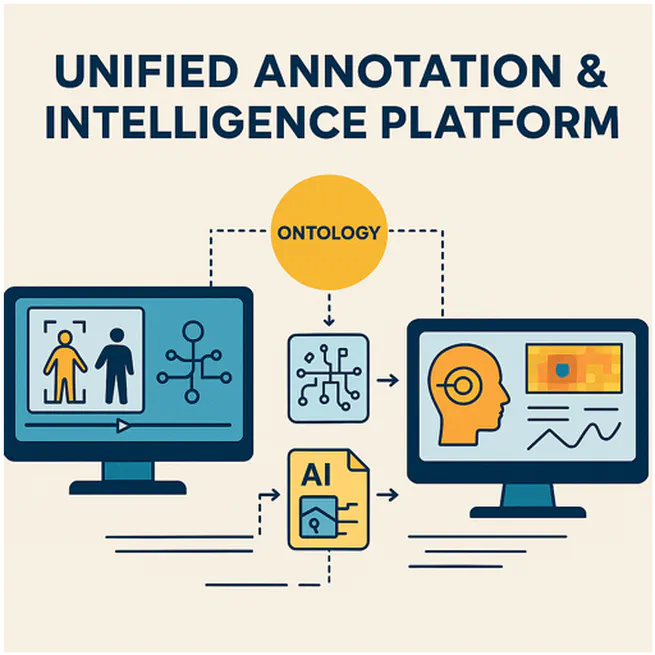

A knowledge-driven pipeline for cross-domain event annotation and visual attention analysis.

January 1, 2021

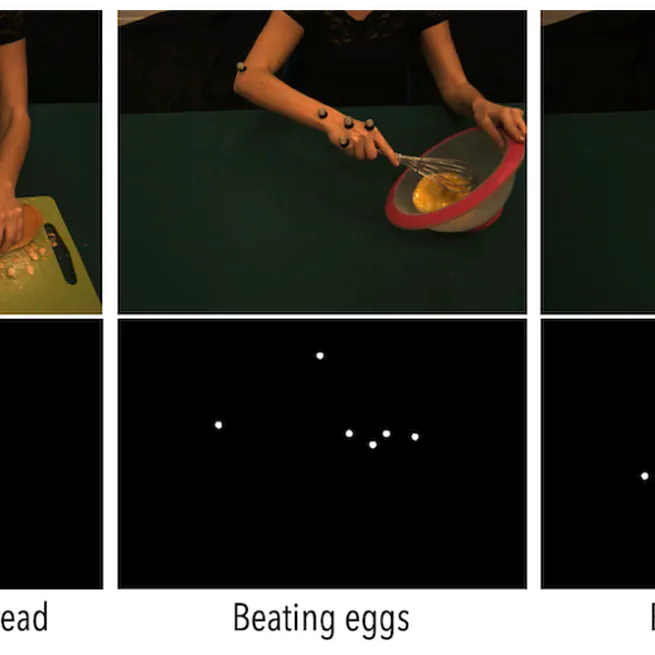

Exploring how humans and computational models perceive similarity in everyday hand actions using motion features.

June 30, 2020

Python-based multimodal data processing for the EU Horizon 2020 DREAM project on robot-assisted autism therapy.

March 31, 2019

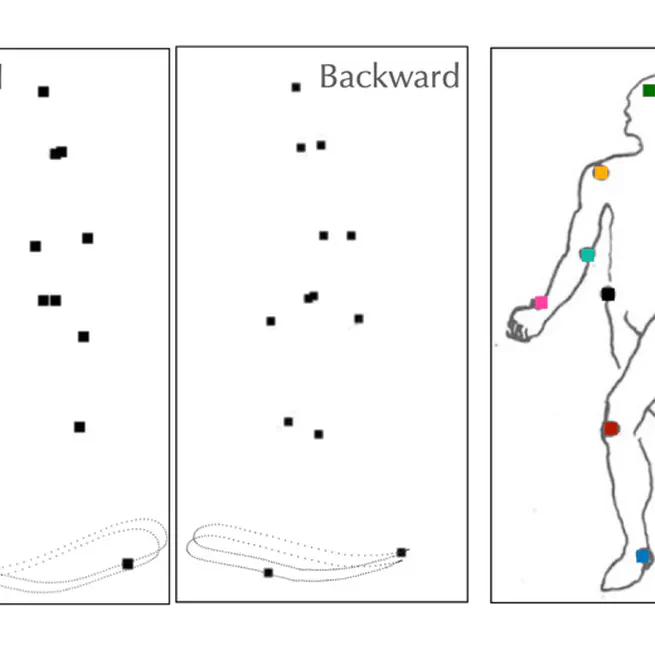

How local motion kinematics and global form contribute to biological motion perception, studied via point-light walker experiments.

January 31, 2019

How eye-tracking neuroscience transformed brand engagement for luxury retail clients.

February 1, 2017

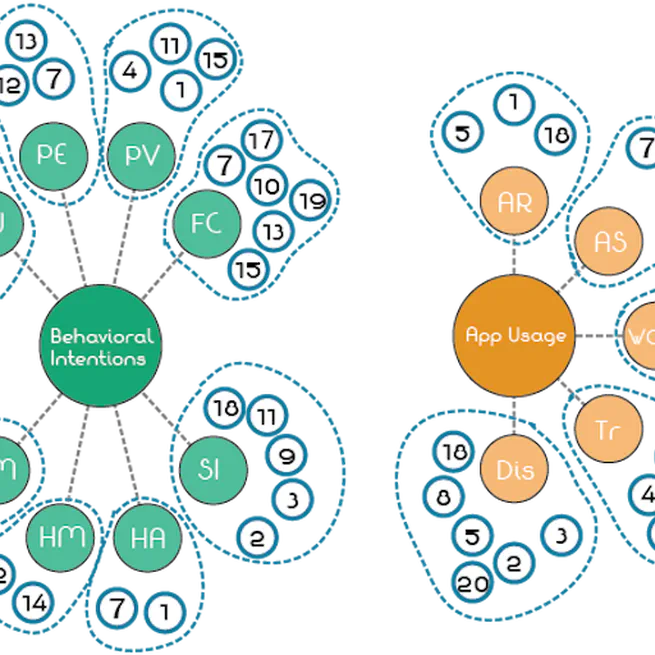

Comprehensive research-driven consultation to increase discovery, engagement, and usage for a location-based community platform.

November 30, 2016

Investigating how virtual driving affects perceived body scale and affordance judgments in real-world aperture navigation.

April 30, 2016

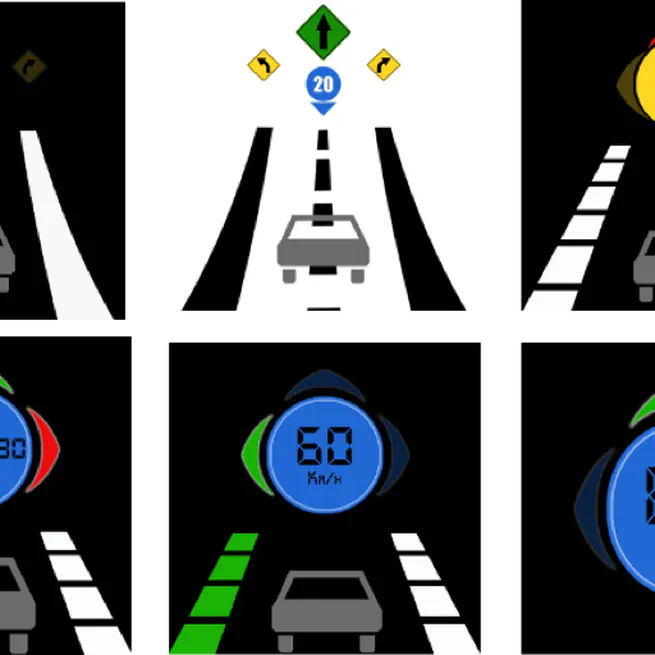

Designing communication strategies and assistive systems to optimize traffic flow in shared spaces by understanding driver behavior and urban movement patterns.

November 30, 2015

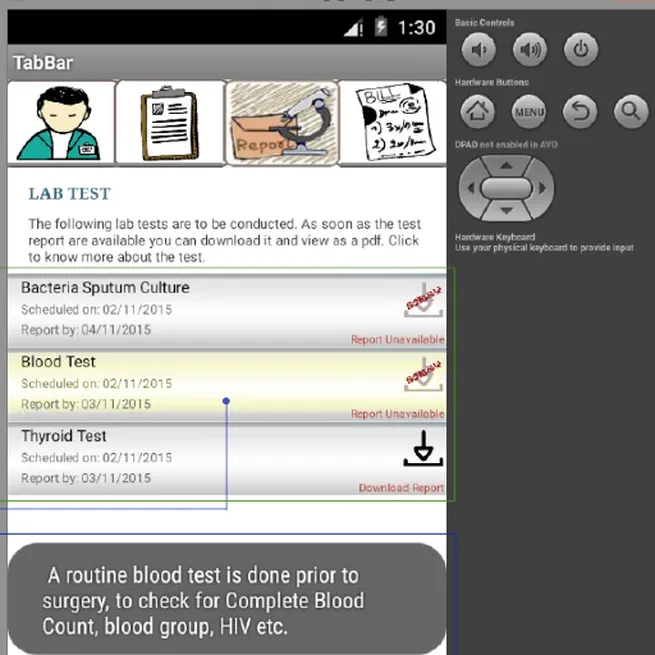

Designing an Android app that enhances transparency between hospitals and patients’ families by integrating patient information into the Hospital Information System (HIS).

July 31, 2015

Exploring the feasibility of Oculus Rift for depth-based cognitive experiments.

April 30, 2015