Event Segmentation Through the Lens of Multimodal Interaction

September 17, 2021

Vipul Nair

Jakob Suchan

Mehul Bhatt

Paul Hemeren

Abstract

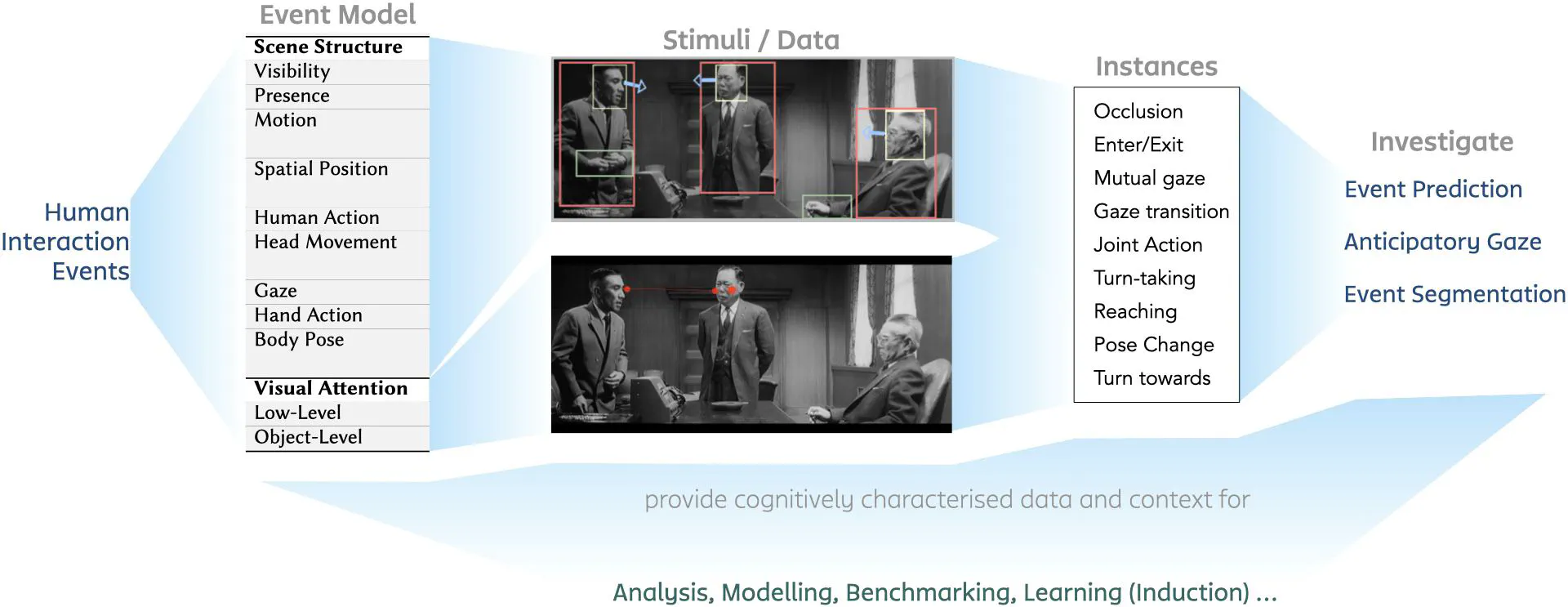

This research explores how multimodal cues—such as gaze, speech, motion, and body posture—influence how humans perceive and segment events in everyday interactions. Focusing on visuoauditory narrative media, we analyzed 25 movie scenes through a detailed event ontology and eye-tracking data from 32 viewers per scene. Early findings reveal patterns like attentional synchrony and gaze pursuit, offering insights into how people predict and understand unfolding events. Our cognitive model has broad applications in narrative media design, cognitive film studies, and human-robot interaction.

Publication

In 8th International Conference on Spatial Cognition: Cognition and Action in a Plurality of Spaces (ICSC 2021)