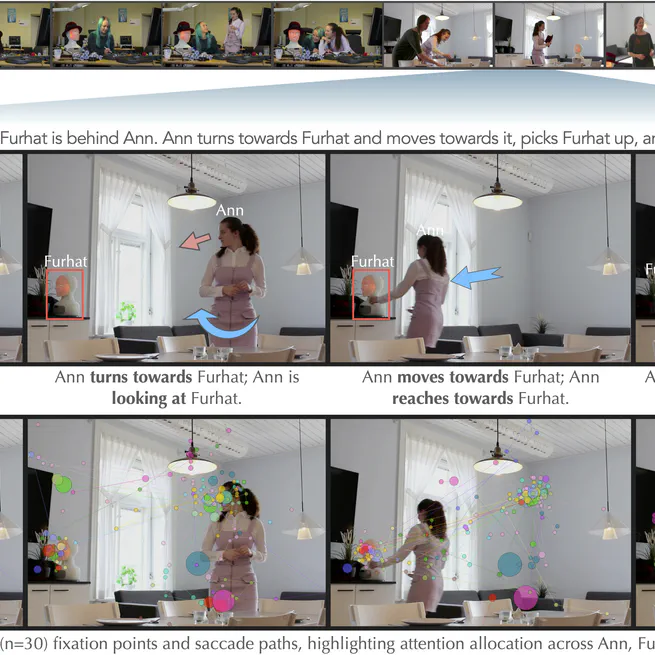

This naturalistic multimodal dataset captures 27 everyday interaction scenarios with detailed annotations of visuospatial and auditory cues, together with eye-tracking data, supporting research in human interaction, attention modeling, and cognitive media studies.

May 1, 2025

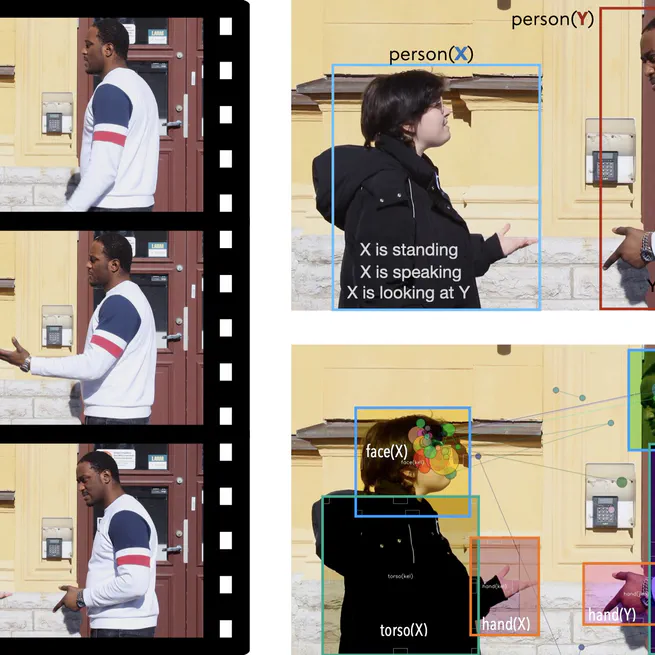

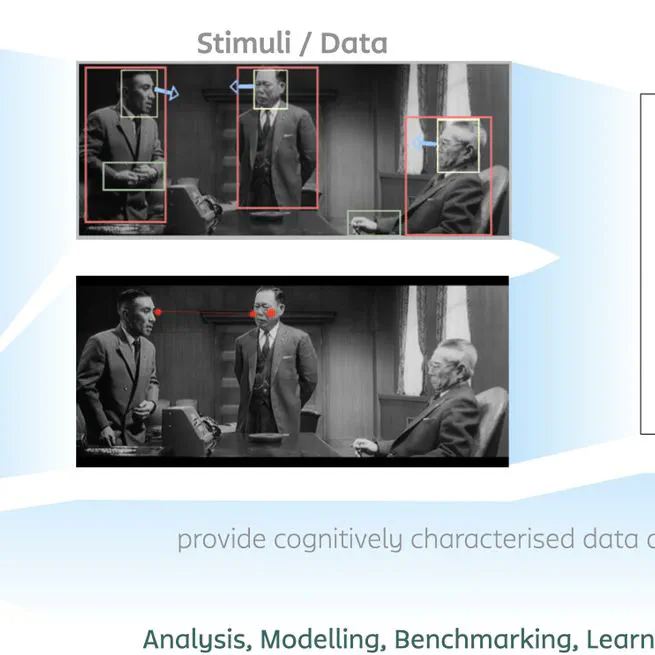

We explore how cues like gaze, speech, and motion guide visual attention while people observe everyday interactions, revealing cross-modal patterns through eye-tracking data and structured event analysis.

January 30, 2025

A multimodal analysis of visuo-auditory and spatial cues and their role in guiding human attention during real-world social interactions.

April 1, 2024

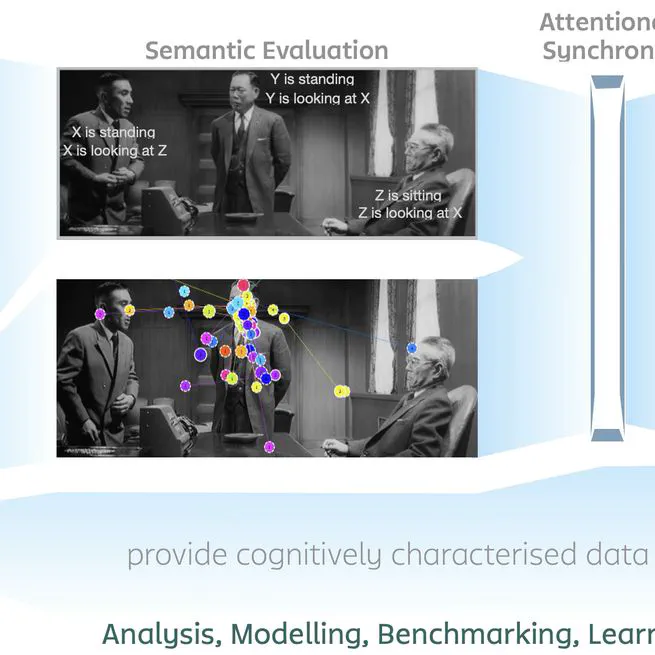

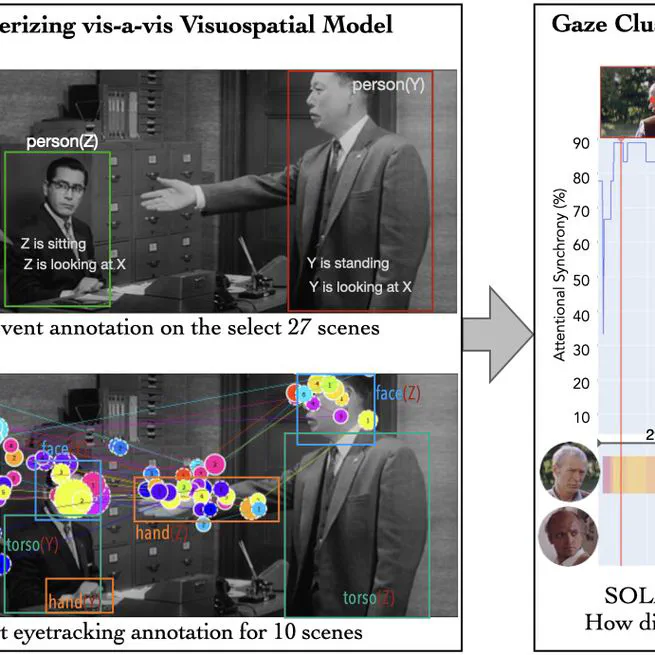

This study analyzes how visuospatial features in film scenes shape attentional synchrony, using eye-tracking data to reveal how scene complexity guides visual attention during event perception.

September 22, 2022

This study explores how visuospatial features in film scenes, such as occlusion or movement, relate to anticipatory gaze and event segmentation, using eye-tracking data and multimodal analysis to uncover patterns in human event understanding.

June 1, 2022

Analyzing how visuospatial features guide collective viewer attention using eye-tracking and semantic modeling.

December 31, 2021

This research develops a conceptual cognitive model to examine how multimodal cues, such as gaze, motion, and speech, influence event segmentation and prediction in narrative media, using detailed scene analysis and eye-tracking data from naturalistic movie viewing.

September 17, 2021

How eye-tracking neuroscience transformed brand engagement for luxury retail clients

February 1, 2017